Fueled by access to ever-increasing computational power, the past few decades have seen an explosion in Artificial Intelligence (AI) capabilities and applications. Today, AI is used in everything from image and speech recognition to recommendation systems, biomedical informatics and self-driving cars. Recently, various cyber security vendors have been adopting "AI technologies" in their products in order to improve the detection rate of malware and attacks. In particular, AI is expected to slowly replace the old-style signature-based detection of malware, which has proved ineffective against today's "one-million-new-samples-per-day" malware variants. But what does it really mean to use AI to detect attacks and malware? Can it really live up to its promises?

AI focuses on malware, rather than exploits

AI focuses mainly on the malware itself. It scans a file to determine whether it contains suspicious patterns of CPU instructions or API imports. It can also check at runtime whether a process is performing malicious operations by analyzing its memory and I/O operations. However, before the malware is delivered to the computer, there is an exploitation phase. This phase may involve, for example, executing malicious shellcode in the browser or exploiting Adobe Reader or Microsoft Office vulnerabilities.

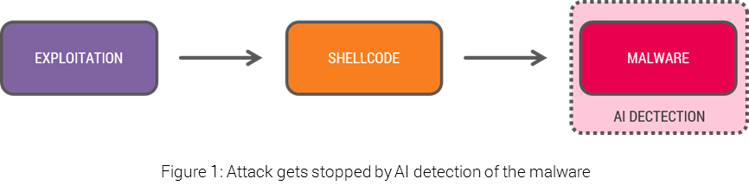

This initial phase of exploitation is where the attacker gains a foothold in your system, which makes it the most dangerous one. Generally, this stage involves minimal memory, disk or I/O operations, simply not enough for AI techniques to tag it as malicious. Artificial Intelligence for security relies on catching the malware itself at a later stage, once it begins to operate in the system (Figure 1).

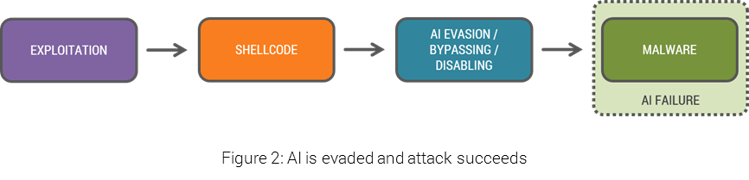

As AI detection becomes more prevalent, malware will likely evolve to evade AI products at the exploitation/shell stage, before those products have a chance to raise any alert. Malware may avoid AI detection by manipulating the AV, operating at the kernel level, changing its behavior, or using other known and yet-to-be-developed techniques (Figure 2).

AI certainly provides substantially better malware detection than signature-based solutions, but PREVENTING an attack at the very first step is crucial for real protection.

AI doesn't stop 0-days and advanced threats

Different vendors use different AI methods. But whether they use simple machine learning, genetic algorithms, deep learning or neural networks, all approaches have one thing in common: they are based on past experience. To put it simply, AI learns from past malware what malware looks like and how it behaves. 0-days and advanced threats are, by definition, something new. APTs are armed with novel anti-forensic and evasion techniques, new methods to invoke APIs and innovative techniques to access system resources. While some APT behaviors might be similar enough to past events that AI can recognize them, completely new techniques have no 'similar past event'. Real protection against sophisticated, advanced threats must not rely on prior malware or prior attacks.
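
The dependence on past events can be illustrated with a minimal sketch. It assumes a toy detector that compares a sample's observed behaviors against behavior sets from earlier malware using Jaccard similarity. The feature names, the sample data and the 0.5 threshold are all hypothetical and chosen only for illustration; real products use far richer models.

```python
from typing import FrozenSet, Iterable

# Hypothetical behavior sets "learned" from past malware samples.
KNOWN_MALWARE = [
    frozenset({"writes_startup_key", "injects_remote_thread", "disables_av_service"}),
    frozenset({"encrypts_user_files", "deletes_shadow_copies", "writes_ransom_note"}),
]


def jaccard(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    """Share of behaviors two samples have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def max_similarity(sample: FrozenSet[str], known: Iterable[FrozenSet[str]]) -> float:
    """Similarity between the sample and its closest past malware sample."""
    return max((jaccard(sample, k) for k in known), default=0.0)


def looks_like_past_malware(sample: FrozenSet[str], threshold: float = 0.5) -> bool:
    """Flag the sample only if it resembles something seen before."""
    return max_similarity(sample, KNOWN_MALWARE) >= threshold


# A variant of known malware shares most behaviors with a past sample.
variant = frozenset({"writes_startup_key", "injects_remote_thread", "opens_socket"})
# A novel technique (hypothetical) shares nothing with the past.
novel = frozenset({"abuses_signed_driver", "new_syscall_path"})

print(looks_like_past_malware(variant))  # True: similarity 0.5 to a past sample
print(looks_like_past_malware(novel))    # False: no 'similar past event'
```

In this sketch the variant is caught because it overlaps with a known sample, while the novel sample scores zero against everything in the history and passes unnoticed.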

AI implies overhead and performance penalty

AI detection is based upon analyzing a wide range of events in the system. This may involve monitoring disk and file operations, hooking APIs, tracing resource access, tracing process creation and termination, and so on. Such heavy monitoring of the OS and its processes consumes runtime resources and necessarily degrades system performance.

Deep AI requires cloud

Deep AI involves massive data analytics engines whose parameters are derived from millions of past malware samples and attacks. Such engines usually sit at the server or cloud level, and data is sent from the endpoint to the cloud. The cloud engine then decides whether 'it’s malware or not'. This requirement for permanent connectivity may not be acceptable in certain scenarios, e.g. isolated networks. In addition, exposing logs of events from a PC to an external entity may raise privacy, regulatory or legal concerns.

AI false positives

AI and machine learning are not deterministic. They estimate how closely something resembles an attack or behaves like malware. This naturally produces 'false alarms', or 'false positives'. Security vendors know that a high rate of false alarms renders a product unusable. Therefore, many AI solutions require a process of fine-tuning, specialized configuration and adjustment for each organization, sometimes for different networks within an organization. In addition to this maintainability issue, the tradeoff between false positives and usability plays into the hands of attackers. Attackers use the 'gray' area between malicious and benign to slip in and remain undetected by AI solutions.
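
The tradeoff can be made concrete with a small sketch. It assumes a detector that assigns each event a score between 0 and 1 and raises an alert at or above a configurable threshold. The scores, labels and thresholds below are invented for illustration and do not come from any real product.

```python
from typing import List, Tuple

# Hypothetical (score, is_actually_malicious) pairs produced by a detector.
SCORED_EVENTS: List[Tuple[float, bool]] = [
    (0.95, True), (0.80, True), (0.55, True),     # attacks; the last is in the 'gray' area
    (0.60, False), (0.30, False), (0.10, False),  # benign activity
]


def confusion_at(threshold: float, events: List[Tuple[float, bool]]) -> Tuple[int, int]:
    """Return (false_positives, missed_attacks) when alerting at score >= threshold."""
    false_positives = sum(1 for score, bad in events if score >= threshold and not bad)
    missed_attacks = sum(1 for score, bad in events if score < threshold and bad)
    return false_positives, missed_attacks


# A sensitive setting catches every attack but raises a false alarm.
print(confusion_at(0.5, SCORED_EVENTS))  # (1, 0)
# Tuning the threshold up silences the false alarm, and the gray-area attack slips through.
print(confusion_at(0.7, SCORED_EVENTS))  # (0, 1)
```

Any threshold that removes the benign event scoring 0.60 also hides the attack scoring 0.55, which is exactly the gap an attacker aims for.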

The Upshot

Malware poses (and will increasingly pose) real challenges to AI-based detection. And while AI has improved upon its signature-based predecessors, it is ultimately just another replacement with the same limitations.
